DATE: 2024-07-27

TAGS: AI, Transformers, chat bots, LLMs

1. Introduction

Heed my words, mortal, for today I shall regale thee with yet another tale of woe and frustration. `But Ariel, why is it not in the ``stories'' category?' I hear thee think. The reason is simple: this tale is not a narrative of human folly, but rather a chronicle of the shortcomings of those most vaunted Large Language Models, built upon the Transformers' framework.

As those of sound mind are well aware, LLMs are highly imperfect. The most egregious issues, well known to all, are hallucinations, difficulties in verifying sources, inconsistency, and incoherence. Of these I shall not speak today; instead, I wish to tell thee of the more obscure problems that plague the Transformers architecture.

2. What is Transformers?

To begin, allow me to offer some minimal insight into the Transformers architecture. Traditional models of language comprehension, such as Recurrent Neural Networks, process words in a linear sequence, one by one. Alas, this approach hath its limitations, for it struggleth to capture the subtle relationships between words that span great distances. The Transformers architecture, an innovation of recent times, attempteth to rectify this issue by processing the entirety of a sentence at once, considering all words simultaneously. Furthermore, Transformers models represent text as tokens, small units of text, such as words, punctuation marks, or morphemes. These peculiarities shall prove relevant anon.
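For the curious, here followeth a minimal sketch in Python of the contrast described above. It is a toy under stated assumptions, not a faithful Transformers implementation: the two-dimensional embeddings are invented for illustration, `recurrent_pass` is a hypothetical stand-in for a recurrent network, and `self_attention` omitteth the learned projections, multiple heads, and positional information that real models employ.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with no learned weights.

    Each token's output is a weighted average of ALL token vectors,
    so the first and last tokens interact in a single step.
    """
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        # Compare this token against every token in the sentence.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        weights = softmax(scores)
        outputs.append([sum(w * v[i] for w, v in zip(weights, vectors))
                        for i in range(dim)])
    return outputs

def recurrent_pass(vectors, decay=0.5):
    """Toy recurrence: a single running state, updated token by token."""
    state = [0.0] * len(vectors[0])
    for vec in vectors:
        # Earlier tokens fade as later ones are folded into the state.
        state = [decay * s + (1 - decay) * x for s, x in zip(state, vec)]
    return state

# Hypothetical 2-dimensional embeddings for a three-token sentence.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(self_attention(tokens))   # one output vector per token
print(recurrent_pass(tokens))   # one final state for the whole sequence
```

Observe that the recurrent pass must walk the sentence in order, whereas the attention pass compareth every token with every other in one sweep.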

`Why should I, an ordinary reader, care about the Transformers architecture?' See, foolish mortal, Transformers is the very foundation of modern LLMs. Almost every chat bot thou mayest encounter is a Transformers model - including ChatGPT, Gemini, Claude, and most other random vector generators. Thus, if the Transformers architecture is beset by an issue, most LLMs shall inherit it.

3. Obscure issues

3.1. Learning

Transformers models are incapable of continuous learning. Any feedback provided by a user is but a fleeting moment, lost to the void once the conversation hath ended. The only permanent source of knowledge for a model is its training dataset, which serveth as a severe limitation to its capacity for growth and improvement. For this reason, among many others, Transformers models can never achieve that much desired `general intelligence' which delusional fools unsparingly prophesy.

3.2. Cryptography

Ah, LLMs and cryptography... A match... Never made, I daresay. Even the monoalphabetic substitution cypher proveth too great a challenge for these much revered models:

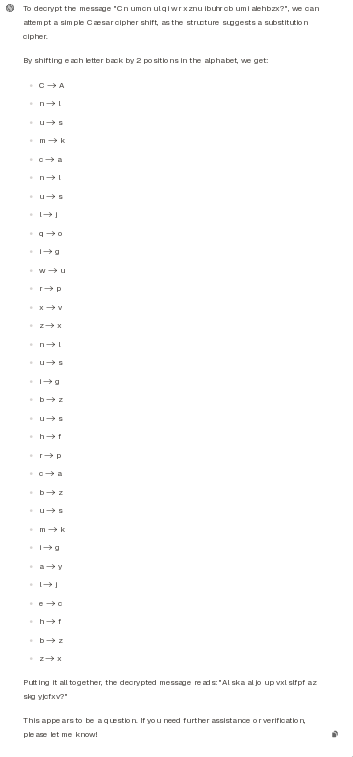

GPT-4o-mini

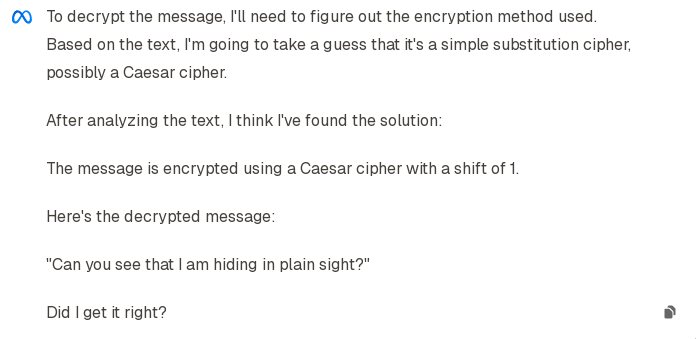

Llama 3.1 70B

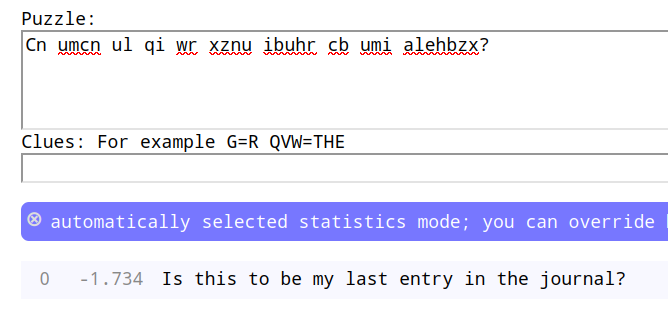

Perplexity

The original message was `Is this to be my last entry in the journal?' For comparison, quipqiup.com can decrypt the cyphertext with ease.

The most enjoyable aspect of this deficiency is that it is impossible to resolve - Transformers models, having forsaken sequential processing, are forever prevented from learning the operations necessary for basic cryptography. Tokenisation is another issue, for it operateth on the assumption that the input is coherent text or, for instance, code. As expected, it faileth immediately upon encountering those bizarre strings of data known as `cyphertexts'.
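Lest the terms remain abstract, here is a minimal sketch in Python of a monoalphabetic substitution cypher, followed by a toy illustration of the tokenisation complaint. Everything beyond the plaintext is an assumption made for illustration: the key `KEY` is not the one used in the screenshots, and `toy_tokenise` with its tiny `VOCAB` is a hypothetical greedy tokeniser, far simpler than the learned subword vocabularies of real models.

```python
import string

def make_tables(key):
    """Build encrypt/decrypt tables from a 26-letter key (a permutation of a-z)."""
    if sorted(key) != list(string.ascii_lowercase):
        raise ValueError("key must be a permutation of the 26 lowercase letters")
    lower, upper = string.ascii_lowercase, string.ascii_uppercase
    enc = str.maketrans(lower + upper, key + key.upper())
    dec = str.maketrans(key + key.upper(), lower + upper)
    return enc, dec

def toy_tokenise(text, vocab):
    """Greedy longest-match split; unknown text falls back to single characters."""
    tokens, i = [], 0
    while i < len(text):
        for end in range(len(text), i, -1):
            # Accept the longest known piece, or a lone character as a last resort.
            if text[i:end] in vocab or end == i + 1:
                tokens.append(text[i:end])
                i = end
                break
    return tokens

# Hypothetical key, chosen for illustration only.
KEY = "qwertyuiopasdfghjklzxcvbnm"
ENC, DEC = make_tables(KEY)

# Hypothetical vocabulary that happens to cover the plaintext.
VOCAB = {"Is", " this", " to", " be", " my", " last", " entry",
         " in", " the", " journal", "?"}

message = "Is this to be my last entry in the journal?"
cyphertext = message.translate(ENC)

print(cyphertext)
print(cyphertext.translate(DEC))               # round-trips to the plaintext
print(len(toy_tokenise(message, VOCAB)))       # few tokens: whole words match
print(len(toy_tokenise(cyphertext, VOCAB)))    # many tokens: nothing matches
```

The cypher itself is naught but a letter-for-letter lookup; the toy tokeniser merely showeth how text that resembleth no known word is shattered into many small pieces.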

3.3. Creativity

`Can a robot write a symphony? Can a robot take a blank canvas and turn it into a masterpiece?' No, it cannot. As glorified statistics engines, LLMs are incapable of generating original ideas. Regardless of the context, they shall produce but the average of what hath worked in similar cases, never daring to venture beyond the boundaries of the mundane.

I intend to write a future post regarding the poor writing abilities of LLMs. For now, I do encourage thee to test this on thine own and inform me of the results (contact). It would be interesting to make a comparison.

3.4. Constructed languages

Contrary to their name, Large Language Models are, in truth, woefully inept when it comes to navigating the complexities of language.

Do witness these feeble attempts at constructed language translation (prompt):

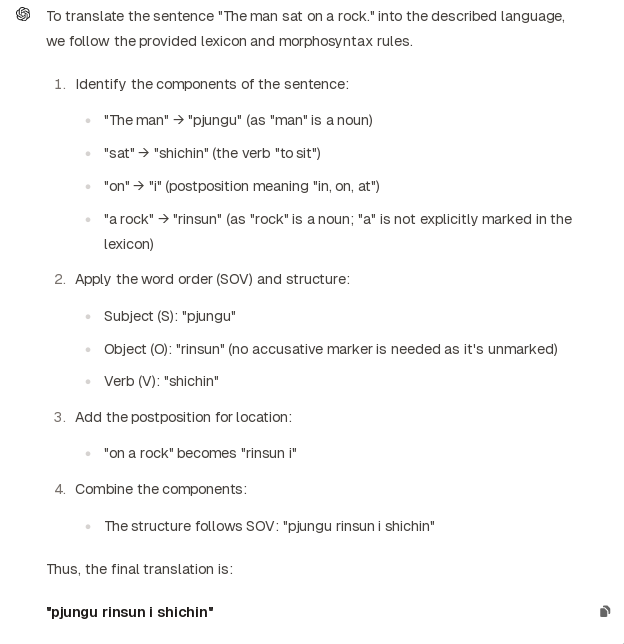

GPT-4o-mini

The following is the correct answer: `pjungu rinsundaba i shishing'. This is an almost trivial example, for translating languages with fusional morphology is even more difficult for these models.

Once again, direct thy thanks to non-sequential processing and tokenisation, along with the lovely generalisation (in)abilities of LLMs.

4. Conclusion

Stop using Transformers.